Globally Aligned Spatial Distribution (GASD) descriptors

This document describes the Globally Aligned Spatial Distribution ([GASD]) global descriptor to be used for efficient object recognition and pose estimation.

GASD is based on the estimation of a reference frame for the whole point cloud that represents an object instance, which is used for aligning it with the canonical coordinate system. After that, a descriptor is computed for the aligned point cloud based on how its 3D points are spatially distributed. Such descriptor may also be extended with color distribution throughout the aligned point cloud. The global alignment transforms of matched point clouds are used for computing object pose. For more information please see [GASD].

Theoretical primer

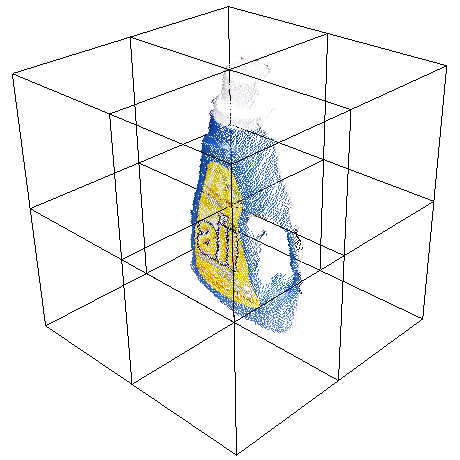

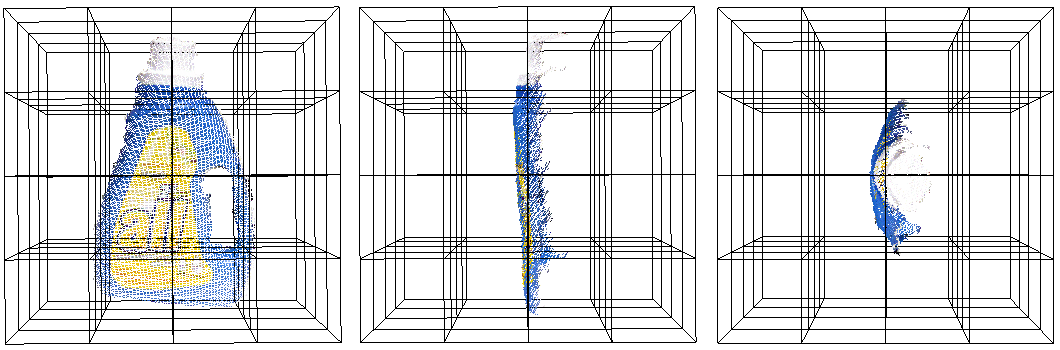

The Globally Aligned Spatial Distribution (or GASD) global description method takes as input a 3D point cloud that represents a partial view of a given object. The first step consists in estimating a reference frame for the point cloud, which allows the computation of a transform that aligns it to the canonical coordinate system, making the descriptor pose invariant. After alignment, a shape descriptor is computed for the point cloud based on the spatial distribution of the 3D points. Color distribution along the point cloud can also be taken into account for obtaining a shape and color descriptor with a higher discriminative power. Object recognition is then performed by matching query and train descriptors of partial views. The pose of each recognized object is also computed from the alignment transforms of matched query and train partial views.

The reference frame is estimated using a Principal Component Analysis (PCA) approach. Given a set of 3D points  that represents a partial view of an object, with

that represents a partial view of an object, with  , the first step consists in computing their centroid

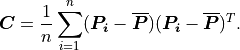

, the first step consists in computing their centroid  , which is the origin of the reference frame. Then a covariance matrix

, which is the origin of the reference frame. Then a covariance matrix  is computed from

is computed from  and

and  as follows:

as follows:

After that, the eigenvalues  and corresponding eigenvectors

and corresponding eigenvectors  of

of  are obtained, with

are obtained, with  , such that

, such that  . Considering that the eigenvalues are arranged in ascending order, the eigenvector

. Considering that the eigenvalues are arranged in ascending order, the eigenvector  associated with the minimal eigenvalue is used as the

associated with the minimal eigenvalue is used as the  axis of the reference frame. If the angle between

axis of the reference frame. If the angle between  and the viewing direction is in the

and the viewing direction is in the ![[-90^{\circ}, 90^{\circ}]](_images/math/23804580f4c5dddff893a3565211175aa4376e13.png) range, then

range, then  is negated. This ensures that the

is negated. This ensures that the  axis always points towards the viewer. The

axis always points towards the viewer. The  axis of the reference frame is the eigenvector

axis of the reference frame is the eigenvector  associated with the maximal eigenvalue. The

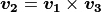

associated with the maximal eigenvalue. The  axis is given by

axis is given by  .

.

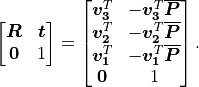

From the reference frame, it is possible to compute a transform ![[\boldsymbol{R} | \boldsymbol{t}]](_images/math/b20f608f30df465a3e8d82b9dcb46d442ff1c4d5.png) that aligns it with the canonical coordinate system. All the points

that aligns it with the canonical coordinate system. All the points  of the partial view are then transformed with

of the partial view are then transformed with ![[\boldsymbol{R} | \boldsymbol{t}]](_images/math/b20f608f30df465a3e8d82b9dcb46d442ff1c4d5.png) , which is defined as follows:

, which is defined as follows:

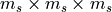

Once the point cloud is aligned using the reference frame, a pose invariant global shape descriptor can be computed from it. The point cloud axis-aligned bounding cube centered on the origin is divided into an  regular grid. For each grid cell, a histogram with

regular grid. For each grid cell, a histogram with  bins is computed. If

bins is computed. If  , then each histogram bin will store the number of points that belong to its correspondent cell in the 3D regular grid. If

, then each histogram bin will store the number of points that belong to its correspondent cell in the 3D regular grid. If  , then for each cell it will be computed a histogram of normalized distances between each sample and the cloud centroid.

, then for each cell it will be computed a histogram of normalized distances between each sample and the cloud centroid.

The contribution of each sample to the histogram is normalized with respect to the total number of points in the cloud. Optionally, interpolation may be used to distribute the value of each sample into adjacent cells, in an attempt to avoid boundary effects that may cause abrupt changes to the histogram when a sample shifts from being within one cell to another. The descriptor is then obtained by concatenating the computed histograms.

Color information can also be incorporated to the descriptor in order to increase its discriminative power. The color component of the descriptor is computed with an  grid similar to the one used for the shape component, but a color histogram is generated for each cell based on the colors of the points that belong to it. Point cloud color is represented in the HSV space and the hue values are accumulated in histograms with

grid similar to the one used for the shape component, but a color histogram is generated for each cell based on the colors of the points that belong to it. Point cloud color is represented in the HSV space and the hue values are accumulated in histograms with  bins. Similarly to shape component computation, normalization with respect to number of points is performed. Additionally, interpolation of histograms samples may also be performed. The shape and color components are concatenated, resulting in the final descriptor.

bins. Similarly to shape component computation, normalization with respect to number of points is performed. Additionally, interpolation of histograms samples may also be performed. The shape and color components are concatenated, resulting in the final descriptor.

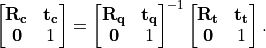

Query and train descriptors are matched using a nearest neighbor search approach. After that, for each matched object instance, a coarse pose is computed using the alignment transforms obtained from the reference frames of the respective query and train partial views. Given the transforms ![[\mathbf{R_{q}} | \mathbf{t_{q}}]](_images/math/4fa7bffeaf6ab646d697f941c6ea19455e13f73d.png) and

and ![[\mathbf{R_{t}} | \mathbf{t_{t}}]](_images/math/48eb833da2f2db0a14a6eb05a1f463a5a584e15a.png) that align the query and train partial views, respectively, the object coarse pose

that align the query and train partial views, respectively, the object coarse pose ![[\mathbf{R_{c}} | \mathbf{t_{c}}]](_images/math/d66b1fe5355dc1c87078925faddc64f1c10c4faf.png) is obtained by

is obtained by

The coarse pose ![[\mathbf{R_{c}} | \mathbf{t_{c}}]](_images/math/d66b1fe5355dc1c87078925faddc64f1c10c4faf.png) can then be refined using the Iterative Closest Point (ICP) algorithm.

can then be refined using the Iterative Closest Point (ICP) algorithm.

Estimating GASD features

The Globally Aligned Spatial Distribution is implemented in PCL as part of the pcl_features library.

The default values for color GASD parameters are:  (half size of 3),

(half size of 3),  ,

,  (half size of 2) and

(half size of 2) and  and no histogram interpolation (INTERP_NONE). This results in an array of 984 float values. These are stored in a pcl::GASDSignature984 point type. The default values for shape only GASD parameters are:

and no histogram interpolation (INTERP_NONE). This results in an array of 984 float values. These are stored in a pcl::GASDSignature984 point type. The default values for shape only GASD parameters are:  (half size of 4),

(half size of 4),  and trilinear histogram interpolation (INTERP_TRILINEAR). This results in an array of 512 float values, which may be stored in a pcl::GASDSignature512 point type. It is also possible to use quadrilinear histogram interpolation (INTERP_QUADRILINEAR).

and trilinear histogram interpolation (INTERP_TRILINEAR). This results in an array of 512 float values, which may be stored in a pcl::GASDSignature512 point type. It is also possible to use quadrilinear histogram interpolation (INTERP_QUADRILINEAR).

The following code snippet will estimate a GASD shape + color descriptor for an input colored point cloud.

1#include <pcl/point_types.h>

2#include <pcl/features/gasd.h>

3

4{

5 pcl::PointCloud<pcl::PointXYZRGBA>::Ptr cloud (new pcl::PointCloud<pcl::PointXYZRGBA>);

6

7 ... read, pass in or create a point cloud ...

8

9 // Create the GASD estimation class, and pass the input dataset to it

10 pcl::GASDColorEstimation<pcl::PointXYZRGBA, pcl::GASDSignature984> gasd;

11 gasd.setInputCloud (cloud);

12

13 // Output datasets

14 pcl::PointCloud<pcl::GASDSignature984> descriptor;

15

16 // Compute the descriptor

17 gasd.compute (descriptor);

18

19 // Get the alignment transform

20 Eigen::Matrix4f trans = gasd.getTransform ();

21

22 // Unpack histogram bins

23 for (std::size_t i = 0; i < std::size_t( descriptor[0].descriptorSize ()); ++i)

24 {

25 descriptor[0].histogram[i];

26 }

27}

The following code snippet will estimate a GASD shape only descriptor for an input point cloud.

1#include <pcl/point_types.h>

2#include <pcl/features/gasd.h>

3

4{

5 pcl::PointCloud<pcl::PointXYZ>::Ptr cloud (new pcl::PointCloud<pcl::PointXYZ>);

6

7 ... read, pass in or create a point cloud ...

8

9 // Create the GASD estimation class, and pass the input dataset to it

10 pcl::GASDEstimation<pcl::PointXYZ, pcl::GASDSignature512> gasd;

11 gasd.setInputCloud (cloud);

12

13 // Output datasets

14 pcl::PointCloud<pcl::GASDSignature512> descriptor;

15

16 // Compute the descriptor

17 gasd.compute (descriptor);

18

19 // Get the alignment transform

20 Eigen::Matrix4f trans = gasd.getTransform ();

21

22 // Unpack histogram bins

23 for (std::size_t i = 0; i < std::size_t( descriptor[0].descriptorSize ()); ++i)

24 {

25 descriptor[0].histogram[i];

26 }

27}

Note

@InProceedings{Lima16SIBGRAPI, author = {Joao Paulo Lima and Veronica Teichrieb}, title = {An Efficient Global Point Cloud Descriptor for Object Recognition and Pose Estimation}, booktitle = {Proceedings of the 29th SIBGRAPI - Conference on Graphics, Patterns and Images}, year = {2016}, address = {Sao Jose dos Campos, Brazil}, month = {October} }