Implicit Shape Model

In this tutorial we will learn how to use the implicit shape model algorithm implemented in the pcl::ism::ImplicitShapeModel class.

This algorithm was described in the article “Hough Transforms and 3D SURF for robust three dimensional classification” by Jan Knopp, Mukta Prasad, Geert Willems, Radu Timofte, and Luc Van Gool.

This algorithm is a combination of generalized Hough transform and the Bag of Features approach and its purpose is as follows. Having some training set - point clouds of different objects of the known class - the algorithm computes a certain model which will be later used to predict an object center in the given cloud that wasn’t a part of the training set.

Theoretical Primer

- The algorithm consists of two steps, the first one is training, and the second is recognition of the objects in the clouds that weren’t in the training set. Let’s take a look at how the training is done. It consists of six steps:

First of all the keypoint detection is made. In the given implementation it’s just a simplification of the training clouds. At this step all the point clouds are simplified by the means of the voxel grid approach; remaining points are declared as keypoints.

For every keypoint features are estimated. In the example below the FPFH estimation is used.

All features are clustered with the help of k-means algorithm to construct a dictionary of visual (or geometric) words. Obtained clusters represent visual words. Every feature in the cluster is the instance of this visual word.

For every single instance the direction to center is computed - a direction from the keypoint (from which the feature was obtained) to the center of mass of the given cloud.

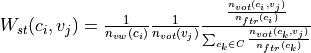

For each visual word the statistical weight is calculated by the formula:

The statistical weight

weights all the votes cast by visual word

weights all the votes cast by visual word  for class

for class  . Here

. Here  is the total number of votes from visual word

is the total number of votes from visual word  ,

,  is the number of votes for class

is the number of votes for class  from

from  ,

,  is the number of visual words that vote for class

is the number of visual words that vote for class  ,

,  is the number of features from which

is the number of features from which  was learned.

was learned.  is the set of all classes.

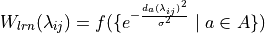

is the set of all classes.For every keypoint (point for which feature was estimated) the learned weight is calculated by the formula:

Authors of the article define

as the vote cast by a particular instance of visual word

as the vote cast by a particular instance of visual word  on a particular training shape of class

on a particular training shape of class  ; that is,

; that is,  records the distance of the particular instance of visual word

records the distance of the particular instance of visual word  to the center of the training shape on which it was found. Here

to the center of the training shape on which it was found. Here  is the set of all features associated with word

is the set of all features associated with word  on a shape of class

on a shape of class  . The recommend value for

. The recommend value for  is 10% of the shape size. Function

is 10% of the shape size. Function  is simply a median.

is simply a median.  is the Euclidean distance between voted and actual center.

is the Euclidean distance between voted and actual center.

- After the training process is done and the trained model (weights, directions etc.) is obtained, the process of object search (or recognition) takes place. It consists of next four steps:

Keypoint detection.

Feature estimation for every keypoint of the cloud.

For each feature the search for the nearest visual word (that is a cluster) in the dictionary is made.

For every feature

For every instance(which casts a vote for the class of interest) of every visual word from the trained model

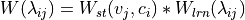

Add vote with the corresponding direction and vote power computed by the formula

Previous step gives us a set of directions to the expected center and the power for each vote. In order to get single point that corresponds to center these votes need to be analysed. For this purpose algorithm uses the non maxima suppression approach. User just needs to pass the radius of the object of interest and the rest will be done by the

ISMVoteList::findStrongestPeaks ()method.

For more comprehensive information please refer to the article “Hough Transforms and 3D SURF for robust three dimensional classification”.

The code

First of all you will need the set of point clouds for this tutorial - training set and set of clouds for recognition. Below is the list of clouds that are well suited for this tutorial (they were borrowed from the Ohio dataset).

- Clouds for training:

- Clouds for testing:

Next what you need to do is to create a file implicit_shape_model.cpp in any editor you prefer and copy the following code inside of it:

1#include <iostream>

2#include <pcl/io/pcd_io.h>

3#include <pcl/features/normal_3d.h>

4#include <pcl/features/feature.h>

5#include <pcl/visualization/cloud_viewer.h>

6#include <pcl/features/fpfh.h>

7#include <pcl/features/impl/fpfh.hpp>

8#include <pcl/recognition/implicit_shape_model.h>

9#include <pcl/recognition/impl/implicit_shape_model.hpp>

10

11int

12main (int argc, char** argv)

13{

14 if (argc < 5 || argc % 2 == 0) // needs at least one training cloud with class id, plus testing cloud with class id (plus name of executable)

15 return (-1);

16

17 unsigned int number_of_training_clouds = (argc - 3) / 2;

18

19 pcl::NormalEstimation<pcl::PointXYZ, pcl::Normal> normal_estimator;

20 normal_estimator.setRadiusSearch (25.0);

21

22 std::vector<pcl::PointCloud<pcl::PointXYZ>::Ptr> training_clouds;

23 std::vector<pcl::PointCloud<pcl::Normal>::Ptr> training_normals;

24 std::vector<unsigned int> training_classes;

25

26 for (unsigned int i_cloud = 0; i_cloud < number_of_training_clouds - 1; i_cloud++)

27 {

28 pcl::PointCloud<pcl::PointXYZ>::Ptr tr_cloud(new pcl::PointCloud<pcl::PointXYZ> ());

29 if ( pcl::io::loadPCDFile <pcl::PointXYZ> (argv[i_cloud * 2 + 1], *tr_cloud) == -1 )

30 return (-1);

31

32 pcl::PointCloud<pcl::Normal>::Ptr tr_normals (new pcl::PointCloud<pcl::Normal>);

33 normal_estimator.setInputCloud (tr_cloud);

34 normal_estimator.compute (*tr_normals);

35

36 unsigned int tr_class = static_cast<unsigned int> (strtol (argv[i_cloud * 2 + 2], 0, 10));

37

38 training_clouds.push_back (tr_cloud);

39 training_normals.push_back (tr_normals);

40 training_classes.push_back (tr_class);

41 }

42

43 pcl::FPFHEstimation<pcl::PointXYZ, pcl::Normal, pcl::Histogram<153> >::Ptr fpfh

44 (new pcl::FPFHEstimation<pcl::PointXYZ, pcl::Normal, pcl::Histogram<153> >);

45 fpfh->setRadiusSearch (30.0);

46 pcl::Feature< pcl::PointXYZ, pcl::Histogram<153> >::Ptr feature_estimator(fpfh);

47

48 pcl::ism::ImplicitShapeModelEstimation<153, pcl::PointXYZ, pcl::Normal> ism;

49 ism.setFeatureEstimator(feature_estimator);

50 ism.setTrainingClouds (training_clouds);

51 ism.setTrainingNormals (training_normals);

52 ism.setTrainingClasses (training_classes);

53 ism.setSamplingSize (2.0f);

54

55 pcl::ism::ImplicitShapeModelEstimation<153, pcl::PointXYZ, pcl::Normal>::ISMModelPtr model (new pcl::features::ISMModel);

56 ism.trainISM (model);

57

58 std::string file ("trained_ism_model.txt");

59 model->saveModelToFile (file);

60

61 model->loadModelFromfile (file);

62

63 unsigned int testing_class = static_cast<unsigned int> (strtol (argv[argc - 1], 0, 10));

64 pcl::PointCloud<pcl::PointXYZ>::Ptr testing_cloud (new pcl::PointCloud<pcl::PointXYZ> ());

65 if ( pcl::io::loadPCDFile <pcl::PointXYZ> (argv[argc - 2], *testing_cloud) == -1 )

66 return (-1);

67

68 pcl::PointCloud<pcl::Normal>::Ptr testing_normals (new pcl::PointCloud<pcl::Normal>);

69 normal_estimator.setInputCloud (testing_cloud);

70 normal_estimator.compute (*testing_normals);

71

72 pcl::features::ISMVoteList<pcl::PointXYZ>::Ptr vote_list = ism.findObjects (

73 model,

74 testing_cloud,

75 testing_normals,

76 testing_class);

77

78 double radius = model->sigmas_[testing_class] * 10.0;

79 double sigma = model->sigmas_[testing_class];

80 std::vector<pcl::ISMPeak, Eigen::aligned_allocator<pcl::ISMPeak> > strongest_peaks;

81 vote_list->findStrongestPeaks (strongest_peaks, testing_class, radius, sigma);

82

83 pcl::PointCloud <pcl::PointXYZRGB>::Ptr colored_cloud (new pcl::PointCloud<pcl::PointXYZRGB>);

84 colored_cloud->height = 0;

85 colored_cloud->width = 1;

86

87 pcl::PointXYZRGB point;

88 point.r = 255;

89 point.g = 255;

90 point.b = 255;

91

92 for (std::size_t i_point = 0; i_point < testing_cloud->size (); i_point++)

93 {

94 point.x = (*testing_cloud)[i_point].x;

95 point.y = (*testing_cloud)[i_point].y;

96 point.z = (*testing_cloud)[i_point].z;

97 colored_cloud->points.push_back (point);

98 }

99 colored_cloud->height += testing_cloud->size ();

100

101 point.r = 255;

102 point.g = 0;

103 point.b = 0;

104 for (std::size_t i_vote = 0; i_vote < strongest_peaks.size (); i_vote++)

105 {

106 point.x = strongest_peaks[i_vote].x;

107 point.y = strongest_peaks[i_vote].y;

108 point.z = strongest_peaks[i_vote].z;

109 colored_cloud->points.push_back (point);

110 }

111 colored_cloud->height += strongest_peaks.size ();

112

113 pcl::visualization::CloudViewer viewer ("Result viewer");

114 viewer.showCloud (colored_cloud);

115 while (!viewer.wasStopped ())

116 {

117 }

118

119 return (0);

120}

The explanation

Now let’s study out what is the purpose of this code. The first lines of interest are these:

for (unsigned int i_cloud = 0; i_cloud < number_of_training_clouds - 1; i_cloud++)

{

pcl::PointCloud<pcl::PointXYZ>::Ptr tr_cloud(new pcl::PointCloud<pcl::PointXYZ> ());

if ( pcl::io::loadPCDFile <pcl::PointXYZ> (argv[i_cloud * 2 + 1], *tr_cloud) == -1 )

return (-1);

pcl::PointCloud<pcl::Normal>::Ptr tr_normals (new pcl::PointCloud<pcl::Normal>);

normal_estimator.setInputCloud (tr_cloud);

normal_estimator.compute (*tr_normals);

unsigned int tr_class = static_cast<unsigned int> (strtol (argv[i_cloud * 2 + 2], 0, 10));

training_clouds.push_back (tr_cloud);

training_normals.push_back (tr_normals);

training_classes.push_back (tr_class);

}

These lines simply load the clouds that will be used for training. Algorithm requires normals so this is the place where they are computed. After the loop is passed all clouds will be inserted to the training_clouds vector. training_normals and training_classes will store normals and class index for the corresponding object.

pcl::FPFHEstimation<pcl::PointXYZ, pcl::Normal, pcl::Histogram<153> >::Ptr fpfh

(new pcl::FPFHEstimation<pcl::PointXYZ, pcl::Normal, pcl::Histogram<153> >);

fpfh->setRadiusSearch (30.0);

pcl::Feature< pcl::PointXYZ, pcl::Histogram<153> >::Ptr feature_estimator(fpfh);

Here the instance of feature estimator is created, in our case it is the FPFH. It must be fully set up before it will be passed to the ISM algorithm. So this is the place where we define all feature estimation settings.

pcl::ism::ImplicitShapeModelEstimation<153, pcl::PointXYZ, pcl::Normal> ism;

This line simply creates an instance of the pcl::ism::ImplicitShapeModelEstimation. It is a template class that has three parameters:

FeatureSize - size of the features (histograms) to compute

PointT - type of points to work with

NormalT - type of normals to use

ism.setFeatureEstimator(feature_estimator);

ism.setTrainingClouds (training_clouds);

ism.setTrainingNormals (training_normals);

ism.setTrainingClasses (training_classes);

ism.setSamplingSize (2.0f);

Here the instance is provided with the training data and feature estimator. The last line provides sampling size value used for cloud simplification as mentioned before.

pcl::ism::ImplicitShapeModelEstimation<153, pcl::PointXYZ, pcl::Normal>::ISMModelPtr model (new pcl::features::ISMModel);

ism.trainISM (model);

These lines simply launch the training process.

model->saveModelToFile (file);

Here the trained model that was obtained during the training process is saved to file for possible reuse.

The remaining part of the code may be moved with a few changes to another .cpp file and be presented as a separate program that is responsible for classification.

This line loads trained model from file. It is not necessary, because we already have the trained model. It is given to show how you can load the precomputed model.

pcl::PointCloud<pcl::PointXYZ>::Ptr testing_cloud (new pcl::PointCloud<pcl::PointXYZ> ());

if ( pcl::io::loadPCDFile <pcl::PointXYZ> (argv[argc - 2], *testing_cloud) == -1 )

return (-1);

pcl::PointCloud<pcl::Normal>::Ptr testing_normals (new pcl::PointCloud<pcl::Normal>);

normal_estimator.setInputCloud (testing_cloud);

normal_estimator.compute (*testing_normals);

The classification process needs the cloud and its normals as well as the training process. So these lines simply load the cloud and compute normals.

model,

testing_cloud,

testing_normals,

testing_class);

This line launches the classification process. It tells the algorithm to look for the objects of type testing_class in the given cloud testing_cloud. Notice that the algorithm will use any trained model that you will pass. After the classification is done, the list of votes for center will be returned. pcl::ism::ISMVoteList is the separate class, which purpose is to help you to analyze the votes.

double sigma = model->sigmas_[testing_class];

std::vector<pcl::ISMPeak, Eigen::aligned_allocator<pcl::ISMPeak> > strongest_peaks;

vote_list->findStrongestPeaks (strongest_peaks, testing_class, radius, sigma);

These lines are responsible for finding strongest peaks among the votes. This search is based on the non-maximum suppression idea, that’s why the non-maximum radius is equal to the object radius that is taken from the trained model.

The rest of the code is simple enough. It is responsible for visualizing the cloud and computed strongest peaks which represent the estimated centers of the object of type testing_class.

Compiling and running the program

Add the following lines to your CMakeLists.txt file:

1cmake_minimum_required(VERSION 3.5 FATAL_ERROR)

2

3project(implicit_shape_model)

4

5find_package(PCL 1.5 REQUIRED)

6

7set(CMAKE_BUILD_TYPE Release)

8include_directories(${PCL_INCLUDE_DIRS})

9link_directories(${PCL_LIBRARY_DIRS})

10add_definitions(${PCL_DEFINITIONS})

11

12add_executable (implicit_shape_model implicit_shape_model.cpp)

13target_link_libraries (implicit_shape_model ${PCL_LIBRARIES})

Note that here we tell the compiler that we want a release version of the binaries, because the process of training is too slow. After you have made the executable, you can run it. Simply do:

$ ./implicit_shape_model

ism_train_cat.pcd 0

ism_train_horse.pcd 1

ism_train_lioness.pcd 2

ism_train_michael.pcd 3

ism_train_wolf.pcd 4

ism_test_cat.pcd 0

Here you must pass the training clouds and the class of the object that it contains. The last two parameters are the cloud for testing and the class of interest that you are looking for in the testing cloud.

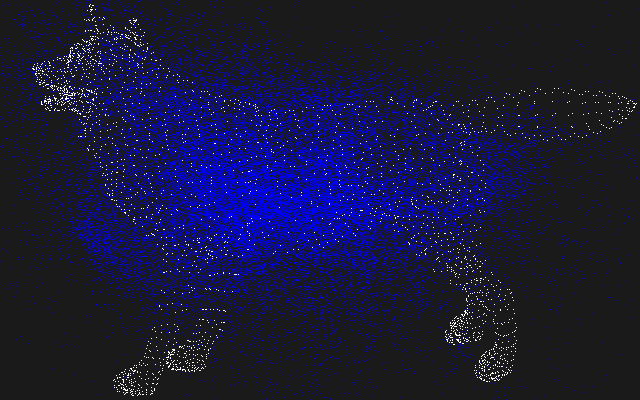

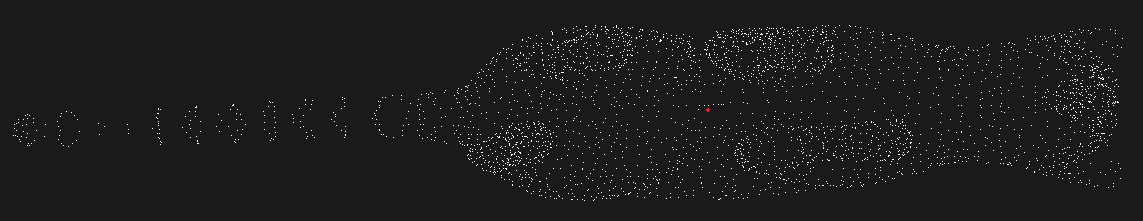

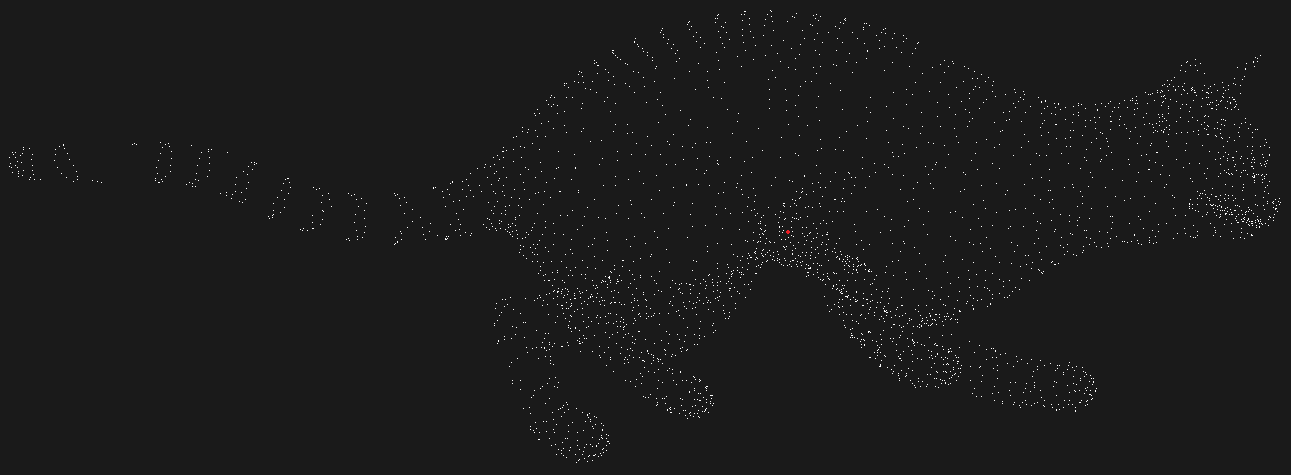

After the segmentation the cloud viewer window will be opened and you will see something similar to those images:

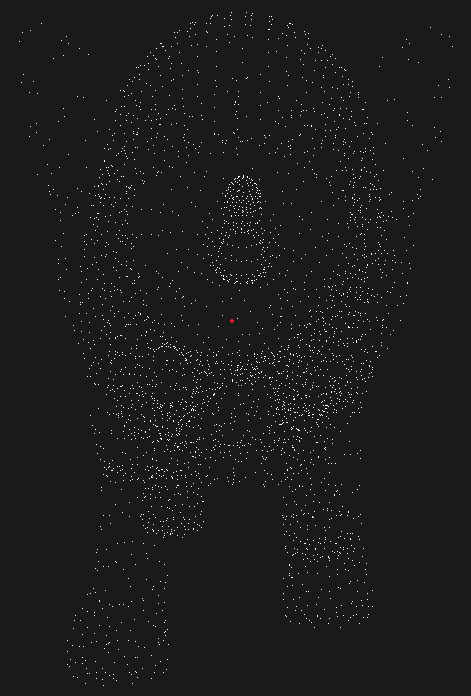

Here the red point represents the predicted center of the object that corresponds to the class of interest. If you will try to visualize the votes you will see something similar to this image where blue points are votes: